How to Boost SMS Survey Response Rates (based on Experiments, A/B Tests)

Optimizing response rates is critical for reliable and robust survey data, and this applies to SMS and WhatsApp surveys as well. To learn what increases response rates in SMS surveys (i.e. text message surveys), we ran an experiment in Kenya. We tested whether personalization and timing increase SMS survey engagement and response rates, and tracked how response rates developed over time. Learn how response rates doubled within five months of survey launch.

The Experiment

Our experiment was designed around a simple idea: make the survey feel personal to increase participation. The first week focused on personalization, using the respondent’s first name in the introduction message. The second week tested the impact of timing, sending messages at either 7 am or 1 pm. We then selected the "winning" option and continued sending surveys weekly, which also allowed us to observe how response rates developed over time.

We sent a five-question SMS survey to 5,181 farmers in 386 farmer groups in Western Kenya. These farmers had been personally contacted and recruited for the SMS surveys 1-2 months earlier. Respondents received phone credit (airtime), valued at 10 Kenyan Shillings, upon completion of a survey. All survey participants received the same airtime incentive, irrespective of experimental assignment or answers.

Week 1: Calling by Name

We started by personalizing the introductory SMS with the recipient’s first name. Instead of opening with just Habari (Swahili for “Hello”), we wrote Habari [FirstName]. For example: Hello Michael, here’s your weekly farmer’s survey (…). This approach is grounded in the principle that seeing one’s name increases trust and involvement.
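The personalization step can be sketched in a few lines of Python. This is a minimal illustration, not the platform's actual implementation: the function name `build_intro` and the fallback to a plain greeting when no first name is on file are assumptions.

```python
from typing import Optional


def build_intro(first_name: Optional[str], body: str) -> str:
    """Build the intro SMS, greeting the recipient by first name if known.

    Hypothetical helper: falls back to the plain "Habari" greeting
    when the first name is missing or blank.
    """
    name = (first_name or "").strip()
    greeting = f"Habari {name}," if name else "Habari,"
    return f"{greeting} {body}"
```

For example, `build_intro("Michael", body)` opens with "Habari Michael," while `build_intro(None, body)` opens with "Habari,". The fallback matters in practice because a template that prints an empty or placeholder name would feel less personal than no name at all.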

Week 2: Timing Matters?

Next, we varied the timing of the SMS delivery. The rationale was that people might be more receptive to surveys at certain times of the day, influenced by their daily routines. We compared sending the survey in the morning (7am) to sending surveys around lunchtime (1pm).

Results

Personalization has a large impact on SMS survey response rates

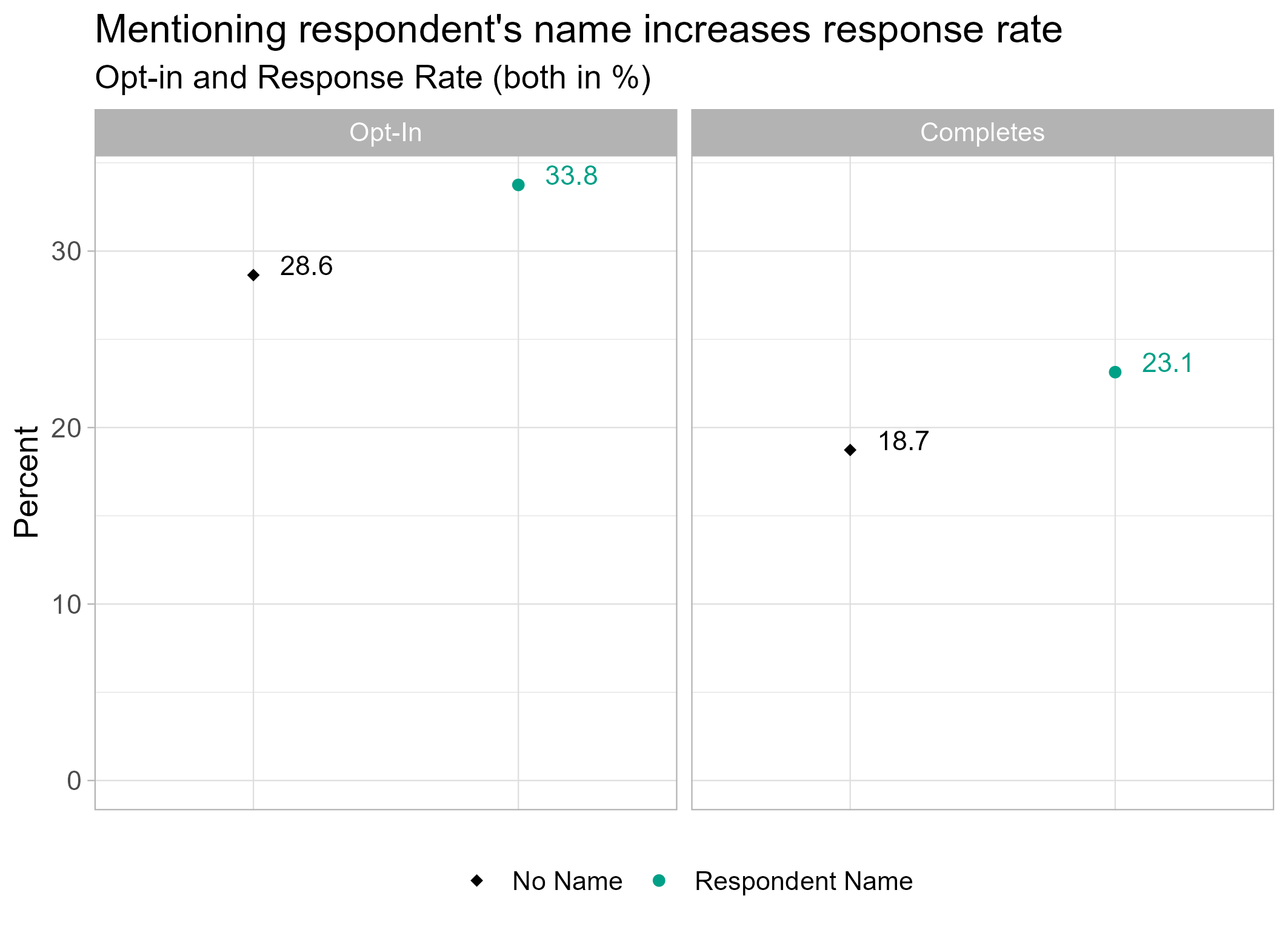

We found that including the respondent’s name in the introduction message (first message) of the text message survey (SMS survey) had a strong, positive effect on response rates. We looked at both opt-in rates (the share of all recipients starting a survey) and response rates (the share of all recipients completing a survey). The latter figure is the most relevant, as researchers and evaluation professionals prefer completed surveys for their analysis.
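To make the two metrics concrete, here is a small sketch of how they could be computed from per-recipient records. The `Recipient` record and its fields are hypothetical, and treating "started" as having answered at least one question is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Recipient:
    started: bool    # assumption: answered at least one question
    completed: bool  # answered all questions of the survey


def survey_rates(recipients):
    """Return (opt_in_rate, response_rate), both as shares of ALL recipients."""
    n = len(recipients)
    if n == 0:
        raise ValueError("no recipients")
    opt_in_rate = sum(r.started for r in recipients) / n
    response_rate = sum(r.completed for r in recipients) / n
    return opt_in_rate, response_rate
```

Both rates use the full recipient list as the denominator, so the response rate can never exceed the opt-in rate.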

- Opt-in Rate: Without the respondent’s name, the opt-in rate was 28.6%. With personalization, it rose to 33%.

- Response rate: Similarly, the response rate increased from 18.7% without the name to 23.1% with the name included. This is an increase of 4.4 percentage points (or 24 percent in relative terms) in the total response rate.
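The difference between percentage points and relative change is easy to mix up, so here is the arithmetic behind the response rate figures as a short sketch (the function name `lift` is ours):

```python
def lift(control_pct: float, treated_pct: float):
    """Return (absolute change in percentage points, relative change in percent)."""
    pp = treated_pct - control_pct
    rel = pp / control_pct * 100
    return pp, rel


# Response rates from the personalization test: 18.7% vs. 23.1%.
pp, rel = lift(18.7, 23.1)
print(f"{pp:.1f} percentage points, {rel:.1f}% relative")
# prints: 4.4 percentage points, 23.5% relative
```

The relative change of about 23.5% is what rounds to the 24 percent quoted above. Applying the same function to the opt-in rates (28.6% vs. 33%) gives 4.4 percentage points, or roughly 15% in relative terms.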

These figures underscore the effectiveness of personalization, with a clear uptick in both opt-in and response rates when respondents were addressed by their names.

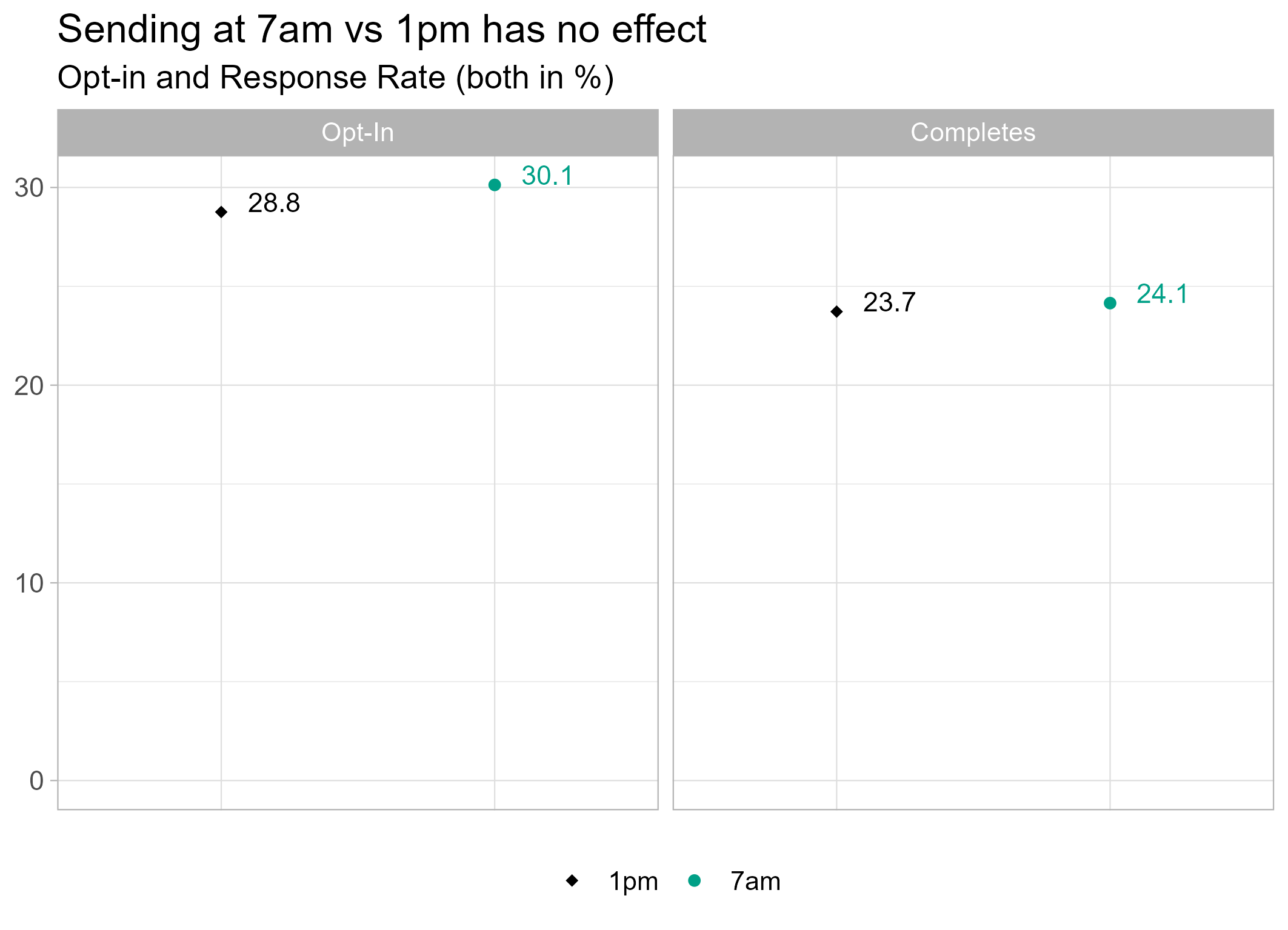

Timing has little influence on SMS survey response rates

In contrast, we did not find statistically significant effects of the timing of the text survey delivery (7am vs 1pm).

- Opt-in Rate: The opt-in rate was 28.8% at 1pm and 30.1% at 7am.

- Response rate: The response rate was almost the same at 23.7% at 1pm compared to 24.1% at 7am.

While the impact of timing was not significant in this experiment, the result may depend on the specific sample, so it can make sense to test timing again in other settings.

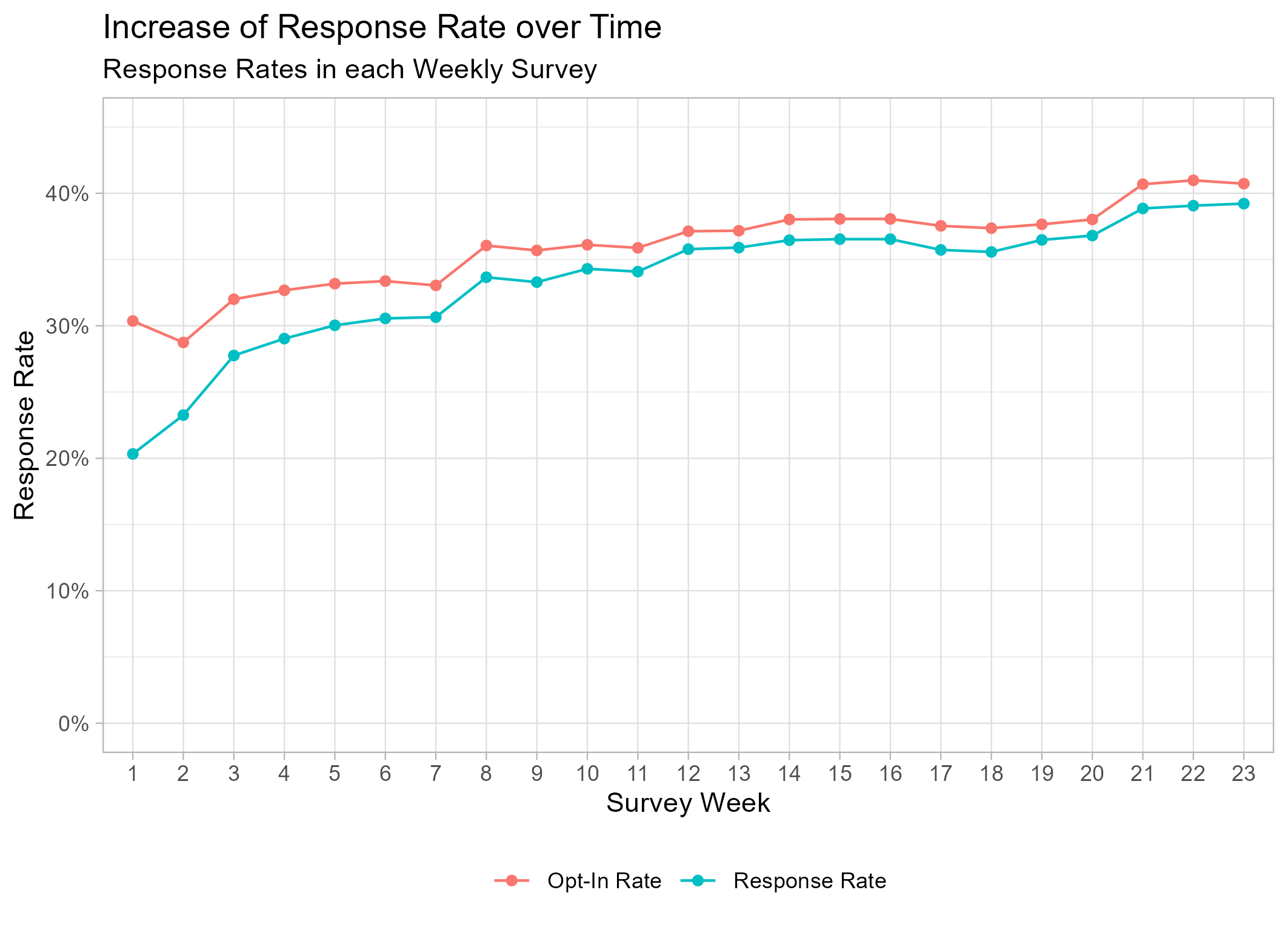

Response rates strongly increase over time

Response rates increase over time: with each additional text message survey, more and more participants join. This is an observation we have made time and time again. Let us illustrate it using the Kenya sample described above.

After the first two weeks of tests, we sent out the surveys with personalization at 1pm every Wednesday. We sent the surveys to all participants, irrespective of whether or not they had participated in a previous week. Here’s what we found:

- Opt-in Rate: Within two months, the text survey opt-in rates increased from 30% to 35%, and after five months to over 40%.

- Response Rate: After two months, the SMS survey response rate had increased from 20% to 35%. After five months of regular SMS surveys, it had doubled from 20% to 40%.

The figure below illustrates how opt-in rates (red) and response rates (blue) developed over time.

The finding of consistently increasing response rates suggests that survey participants appreciated the survey, and likely also told other people in their farmer group about it. This result is also why, at Impaxio, we always recommend running SMS surveys continuously and regularly, ideally with a weekly or monthly frequency.

Key Takeaways

- Personalize, personalize, personalize your SMS surveys!

Calling respondents by their name in the introductory message is a “must-have” for all SMS/text message surveys. Additionally, always consider whether there are other ways to make your survey feel more personal. For example, mention your organization’s name, or mention the village where the person is located.

- Consider timing of sending your surveys and test when in doubt.

Results may depend on the specific sample, so it can make sense to test timing again in other settings. Always think about the times of day and days of the week when your respondents are most likely to be available.

- Response rates increase over time! Send SMS surveys regularly.

Response rates increase over time: with each additional survey, more and more participants join. Response rates doubled within five months of regular surveys. This is why we always recommend conducting mobile surveys continuously and regularly, ideally with a weekly or monthly frequency.

Using the Impaxio platform, you can easily implement all of these recommendations in your SMS surveys and WhatsApp surveys, with easy-to-use features for personalizing your survey intro message and choosing when your surveys are released. You can also schedule your surveys to be released automatically at your desired time interval, for example weekly or monthly (recommended) or quarterly.

Background on Methods used in this SMS survey response rate experiment

To mitigate spatial spillovers, we randomly allocated the treatments by clusters of farmer groups (similar to allocation by villages) and blocked similar groups together. This means that in each farmer group, every person received the same survey condition (e.g. everyone in that group would get the survey at 7am). Blocking helps ensure a good balance between treatment arms, e.g. in terms of the gender and age of survey recipients.

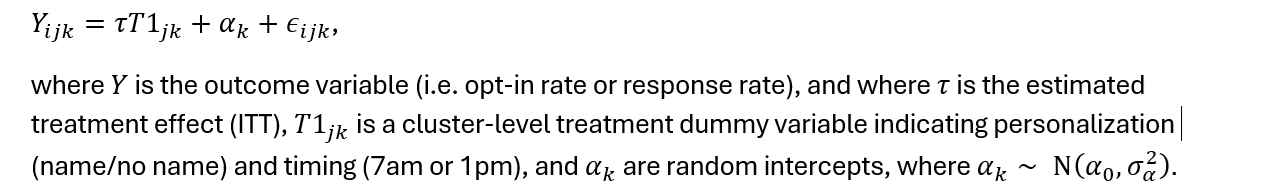
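A blocked, cluster-level assignment of this kind can be sketched as follows. This is an illustration under assumptions, not the exact procedure used in the study: it forms blocks as pairs of groups that are adjacent after sorting on a single covariate (hypothetical here), and then flips a coin within each pair.

```python
import random


def assign_pairs(groups, covariate, seed=0):
    """Pair similar groups and randomize treatment within each pair.

    groups: list of farmer group ids.
    covariate: dict mapping group id -> number used to judge similarity
               (hypothetical, e.g. group size).
    Returns a dict mapping group id -> (block_index, arm).
    With an odd number of groups, the last group is left unassigned.
    """
    rng = random.Random(seed)
    ordered = sorted(groups, key=lambda g: covariate[g])
    assignment = {}
    for block, i in enumerate(range(0, len(ordered) - 1, 2)):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)  # coin flip: which group in the pair is treated
        assignment[pair[0]] = (block, "treatment")
        assignment[pair[1]] = (block, "control")
    return assignment
```

Every member of a group then inherits the group's arm, so nobody in the same farmer group receives a different survey condition.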

The intent-to-treat (ITT) effect, i.e., the total effect of the treatment on outcomes of interest, irrespective of experimental compliance, was estimated as the unweighted average of within-pair mean differences between treatment and control clusters. Specifically, we estimated separate mixed-effects models with the following specification, indexing observations by cluster and block:

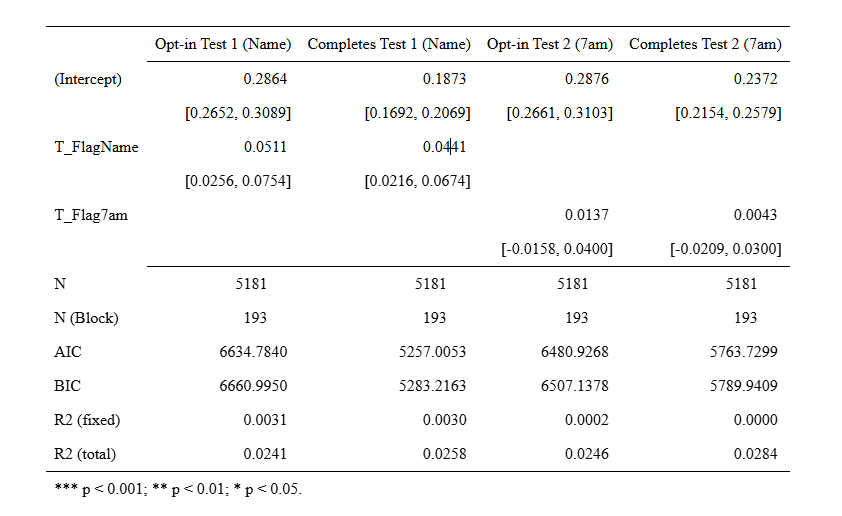
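As a rough illustration of the estimator described in words above, the sketch below computes the unweighted average of within-pair differences and a percentile bootstrap interval. It does not fit the mixed-effects model itself; resampling whole pairs is an assumption about the bootstrap scheme, and the input numbers in the usage note are made up for illustration, not taken from the study.

```python
import random
from statistics import mean


def itt_estimate(pairs):
    """Unweighted average of within-pair differences.

    pairs: list of (treated_cluster_mean, control_cluster_mean) tuples,
           one tuple per matched pair of clusters.
    """
    return mean(t - c for t, c in pairs)


def bootstrap_ci(pairs, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval, resampling whole pairs with replacement."""
    rng = random.Random(seed)
    estimates = sorted(
        itt_estimate(rng.choices(pairs, k=len(pairs))) for _ in range(n_boot)
    )
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper
```

With four made-up pairs such as `[(0.25, 0.20), (0.30, 0.22), (0.18, 0.19), (0.27, 0.21)]`, `itt_estimate` averages the differences 0.05, 0.08, -0.01 and 0.06, giving 0.045, i.e. 4.5 percentage points. Resampling pairs rather than individuals keeps the cluster structure of the design intact.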

The table below summarizes the regression results (first two weeks). Values in square brackets are bootstrapped confidence intervals (95% level).